With the recent launches of the Redmi Note 7, Honor View 20 and Huawei P20 Pro, a term has been floating around the market: pixel binning. Some say it’s bad, some say it’s good, and some say it’s “too technical”. Here we try to explain pixel binning in layman’s terms.

What is Pixel binning?

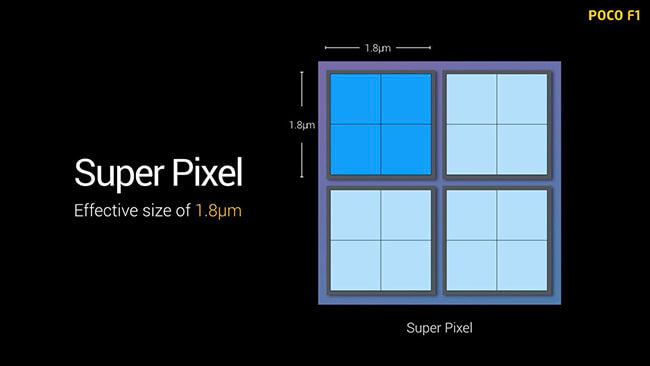

Obviously, this is the first question that comes to mind. In simple words, pixel binning is the process of combining 4 small pixels into 1 big pixel while capturing an image. As a quick recap, pixels are the smallest units of an image that contain information. What an atom is to an element, a pixel is to an image; it is as simple as that. Pixel binning takes 4 pixels and adds them together to create 1 super pixel.

Undoubtedly, the larger the pixel, the more light it collects and the more information it records. So the ISP (image signal processor) in your phone’s chipset, which processes the raw data and creates the image, has more data to work with to create a good-looking image. Imagine yourself painting a masterpiece with only 10 colors in your palette. Now imagine painting the same masterpiece with 100 colors. You now have more choice, information and data to work with, and can create a better painting.

Why pixel binning?

There is a limit to what software can do. Beyond that point, software alone won’t be enough. To stay ahead of the competition and deliver better images, brands need to provide better cameras. They can hire the best software teams, but the software will always be limited by the hardware. At some point, they’ll need to upgrade the sensor itself, and better hardware undoubtedly increases cost. Here, pixel binning comes to a temporary rescue. It helps the camera sensor squeeze out the last drop of information and hand it to the ISP. As you may have guessed by now, there is a limit to pixel binning too. After that, the hardware must be upgraded.

What is the process of pixel binning?

To know how pixel binning works, we need to first know how a sensor works.

First of all, there is a monochrome sensor. On its own, the sensor only records how much light hits each pixel, not what color it is. Remember those Honor phones with monochrome secondary sensors? Yes, the same kind of sensor forms the foundation of the primary sensor too.

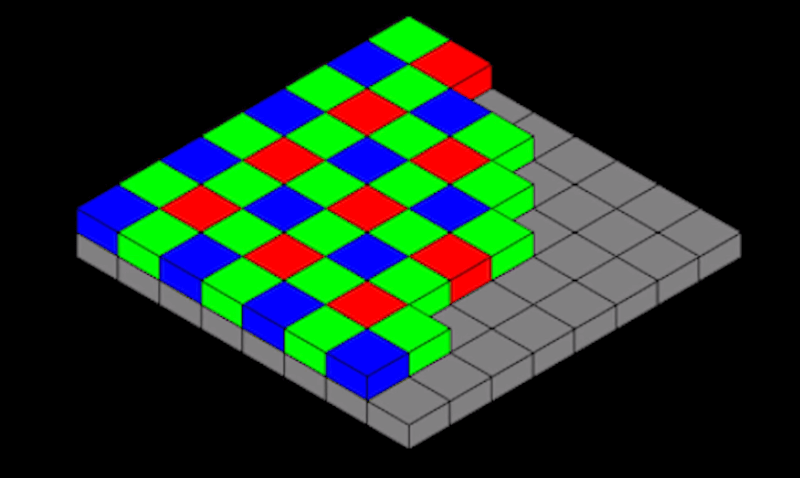

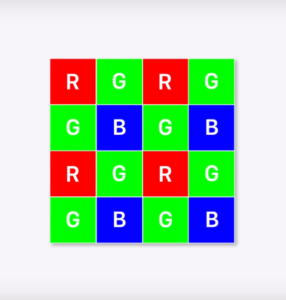

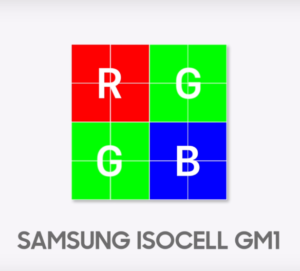

On top of it sits a Bayer filter. The Bayer filter is a grid of 3 colors (red, green and blue), with one color over each individual pixel. The mix used is 25% red, 25% blue and 50% green. Why 50% green? Because the human eye is more sensitive to green than to the other two colors.
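To make the 25/50/25 split concrete, here is a small sketch that lays out a Bayer grid and counts the share of each color. It assumes the common RGGB arrangement (red and green on even rows, green and blue on odd rows), which the article does not specify; the function names are made up for illustration.

```python
from collections import Counter


def bayer_color(row, col):
    """Return the filter color over the pixel at (row, col).

    Assumes an RGGB layout: R G on even rows, G B on odd rows.
    """
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def color_shares(height, width):
    """Return the fraction of pixels covered by each filter color."""
    counts = Counter(
        bayer_color(r, c) for r in range(height) for c in range(width)
    )
    total = height * width
    return {color: counts[color] / total for color in "RGB"}


# Print the layout of a 4x4 patch, then the share of each color.
for r in range(4):
    print(" ".join(bayer_color(r, c) for c in range(4)))
print(color_shares(4, 4))  # {'R': 0.25, 'G': 0.5, 'B': 0.25}
```

Any grid with an even number of rows and columns gives the same shares, because the 2×2 tile simply repeats.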

When you press the shutter, light passes through the Bayer filter and reaches the sensor, so each pixel records the brightness of one color. The sensor passes this data on, and the ISP (image signal processor) turns it into a full-color image, applies AI or any custom algorithm, and creates the compressed image as a JPG.

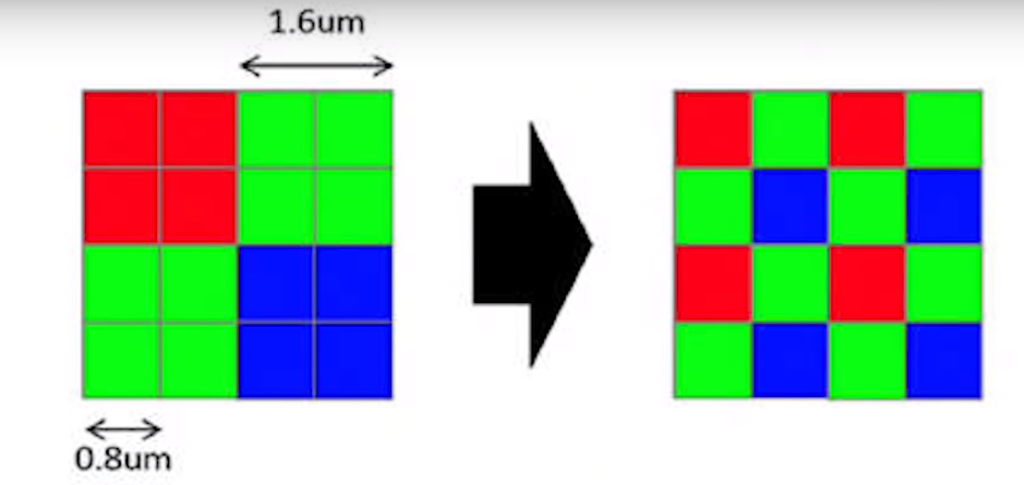

Pixel binning happens before the JPG is created. 4 adjacent pixels are considered together to create one large pixel. Below we can see the pixel sizes of the Redmi Note 7, which uses pixel binning to obtain a larger effective pixel size. Depending on how the sensor is configured, manufacturers can program it to perform 2×2 pixel binning, 2×1 binning (horizontal pixels) or 1×2 binning (vertical pixels). The latter two techniques are not used in consumer imaging devices.
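As a rough illustration of the three binning modes, the sketch below sums blocks of neighboring values in a tiny made-up frame. It is a simplification under stated assumptions: the frame is treated as a single-channel grid of brightness values, color filters are ignored, and `bin_pixels` is a hypothetical helper, not how any particular sensor or ISP implements binning.

```python
def bin_pixels(image, bin_rows=2, bin_cols=2):
    """Sum each bin_rows x bin_cols block of `image` into one output value.

    `image` is a list of equal-length rows of numbers (one brightness
    value per pixel). Color filters are ignored in this sketch.
    """
    height, width = len(image), len(image[0])
    if height % bin_rows or width % bin_cols:
        raise ValueError("image dimensions must be multiples of the bin size")
    binned = []
    for top in range(0, height, bin_rows):
        out_row = []
        for left in range(0, width, bin_cols):
            block_sum = sum(
                image[r][c]
                for r in range(top, top + bin_rows)
                for c in range(left, left + bin_cols)
            )
            out_row.append(block_sum)
        binned.append(out_row)
    return binned


frame = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]

print(bin_pixels(frame, 2, 2))  # 2x2 binning: [[14, 22], [46, 54]]
print(bin_pixels(frame, 1, 2))  # horizontal pairs: [[3, 7], [11, 15], [19, 23], [27, 31]]
print(bin_pixels(frame, 2, 1))  # vertical pairs: [[6, 8, 10, 12], [22, 24, 26, 28]]
```

Notice that 2×2 binning turns the 4×4 frame into a 2×2 one: each output value carries the combined signal of four pixels, but there are only a quarter as many of them.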

Comparison of a general sensor vs the Samsung GM1, which uses pixel binning:

The benefit of Pixel Binning?

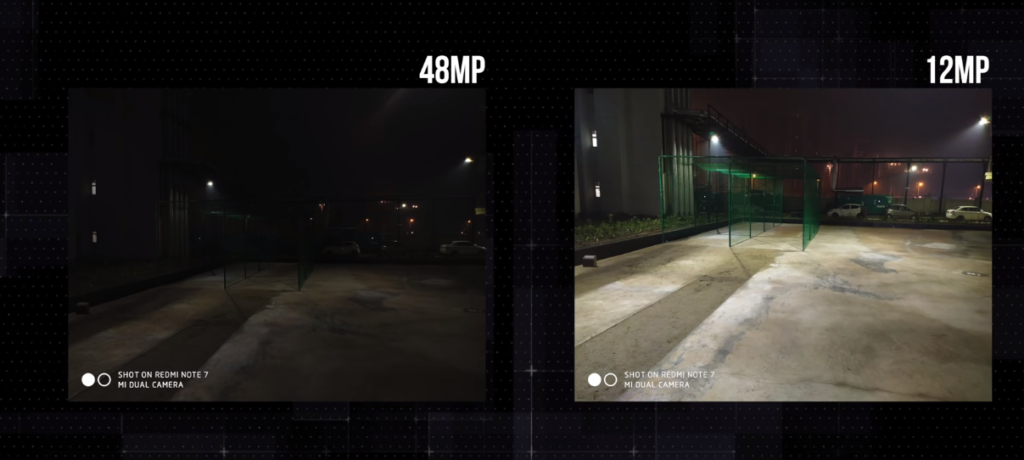

Pixel binning tackles a prominent problem: low-light photography. Mobile sensors are too small to let in much light, and as we already know, the more light the sensor gets, the better the image becomes. So, instead of using a pixel size of 1.2 microns or similar (which is big for a mobile camera), pixel binning takes 4 pixels of, say, 0.8 microns each and combines them into one 1.6-micron pixel, which is bigger. Usually, cameras raise the ISO in low light to brighten the image, but along with brightening the image, a higher ISO increases noise. A camera using pixel binning can get a bright image without raising the ISO as much, so that noise can be avoided to a certain extent. In good light, a better dynamic range is also noticeable, though the practical difference in good lighting is not dramatic.
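The pixel-size arithmetic can be checked with a few lines. This is only a sketch using the example figures above (0.8, 1.2 and 1.6 microns); it assumes square pixels and that light gathered scales with pixel area, and the function names are illustrative.

```python
def binned_pitch_um(pitch_um, pixels_per_side=2):
    """Side length (in microns) of the super pixel made by binning."""
    return pitch_um * pixels_per_side


def area_gain(pixels_per_side=2):
    """How many times more area the super pixel has than one small pixel."""
    return pixels_per_side ** 2


def area_ratio(pitch_a_um, pitch_b_um):
    """Area of a square pixel with side A relative to one with side B."""
    return (pitch_a_um / pitch_b_um) ** 2


super_pixel = binned_pitch_um(0.8)
print(super_pixel)                             # 1.6
print(area_gain())                             # 4
print(round(area_ratio(super_pixel, 1.2), 2))  # about 1.78
```

So the binned 1.6-micron pixel has four times the area of each 0.8-micron pixel, and roughly 1.8 times the area of a native 1.2-micron pixel.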

Sample Shots:

For more sample shots, check the Flickr Gallery by digit.in.

Is everything cool? Nah.

It reduces the resolution to 1/4th, so a 12MP sensor would produce 3MP images. Not that impressive, right? To solve this, the sensor needs a higher resolution to begin with. Huawei used a 40MP sensor to create 10MP images. Honor and Xiaomi used 48MP sensors to create 12MP images. Lowering the resolution does mean a loss of detail. Wait, didn’t I just read that pixel binning increases quality? Yes, and it does. A 12MP pixel-binned image can offer better quality than an image from a native 12MP sensor with small pixels. But that 12MP pixel-binned image doesn’t come close to one from a native 48MP sensor (as seen in DSLRs).
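The resolution trade-off is simple division. The sketch below, with a hypothetical helper name, reproduces the figures above for 2×2 binning.

```python
def binned_megapixels(sensor_mp, bin_rows=2, bin_cols=2):
    """Output resolution (in MP) after binning bin_rows x bin_cols pixels."""
    return sensor_mp / (bin_rows * bin_cols)


for sensor_mp in (12, 40, 48):
    print(f"{sensor_mp}MP sensor -> {binned_megapixels(sensor_mp):g}MP image")
# 12MP sensor -> 3MP image
# 40MP sensor -> 10MP image
# 48MP sensor -> 12MP image
```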

What about RAW image and JPG Compression?

Another downside for developers and professionals is the lack of RAW files. With pixel binning, you get either a pixel-binned JPG or a non-pixel-binned RAW image. A pixel-binned RAW image is something the ISP cannot generate. Why so? Pixel binning is carried out by the ISP, and ISPs generate JPG images. The RAW image is the information before the ISP deals with it.

On top of everything, if the JPG compression is not done right, images might have a watercolor effect. If you’re wondering what JPG compression is, let us answer that too. JPG is a file format that is easy to share and can be opened almost anywhere. To create a JPG, the ISP compresses the image data to reduce the file size. A 12MP RAW image will be around 10-20MB depending on the sensor; JPG compression brings that down to 2-4MB. If the manufacturer uses overly aggressive JPG compression, pictures will lose detail and become blurry.

Current smartphones?

Currently, the Redmi Note 7 uses pixel binning, as do the Honor View 20 and Huawei P20 Pro. These phones use pixel binning by default. Other than these, the Xiaomi Mi A2, Redmi Y2 and LG G7+ ThinQ use the same technique.

Let’s Conclude

Pixel binning trades sensor resolution for better illumination in an image and truly comes in handy when shooting in low light. While you are left with only 1/4th of the sensor resolution, you end up with images that are social media worthy. That said, if the sensor on the Huawei P20 Pro had a resolution of 16 or 20 megapixels (instead of 40) while keeping its 1/2″ size, it would perform better than it does today. Given the space constraints within a smartphone, large-format sensors are very unlikely to become standard practice. Obviously, you don’t want a huge circle on the back of your phone just for a sensor, do you?

If we’ve been able to clear your doubts, share this with fellow enthusiasts. Do comment if you still have any doubts left.